"Do our inserts actually work?"

It's a fair question. You're spending money on printed materials, inventory space, and fulfillment labor. Leadership wants to know the ROI.

The honest answer for most brands: "We don't know."

They add inserts because it "feels right" or "competitors do it." They have no data on whether a thank you card outperforms a discount card, or if samples convert better than promotional flyers.

This guide changes that. You'll learn how to run real A/B tests on your inserts, interpret results correctly, and present data that justifies (or cuts) your insert budget.

Testing physical marketing is harder than testing digital:

Manual processes make tests impractical. You can't easily split orders between different inserts without warehouse coordination.

No tracking mechanism. Once an insert goes in a box, how do you know who received what?

"We've always done it this way." Insert programs often run on autopilot without measurement.

Small sample sizes. Some brands don't have enough orders to reach significance.

These are real obstacles. But they're solvable.

Before setting up tests, know what's worth testing:

| Test | Hypothesis | Why It Matters |
|---|---|---|
| Insert vs. no insert | Inserts drive more repeat purchases than no insert | Proves the entire program has value |
| Discount vs. thank you card | Discounts drive more conversions | Determines optimal insert type |
| Different discount amounts | 15% converts better than 10% | Optimizes cost vs. conversion |
| Sample A vs. Sample B | One sample converts better to purchase | Picks the best cross-sell sample |

These matter less but can still be tested:

Start with high-impact tests. Don't optimize card stock before proving inserts work at all.

Good hypothesis: "Discount cards drive more repeat purchases than thank you cards among first-time buyers."

Bad hypothesis: "Let's test some stuff."

Your hypothesis should be:

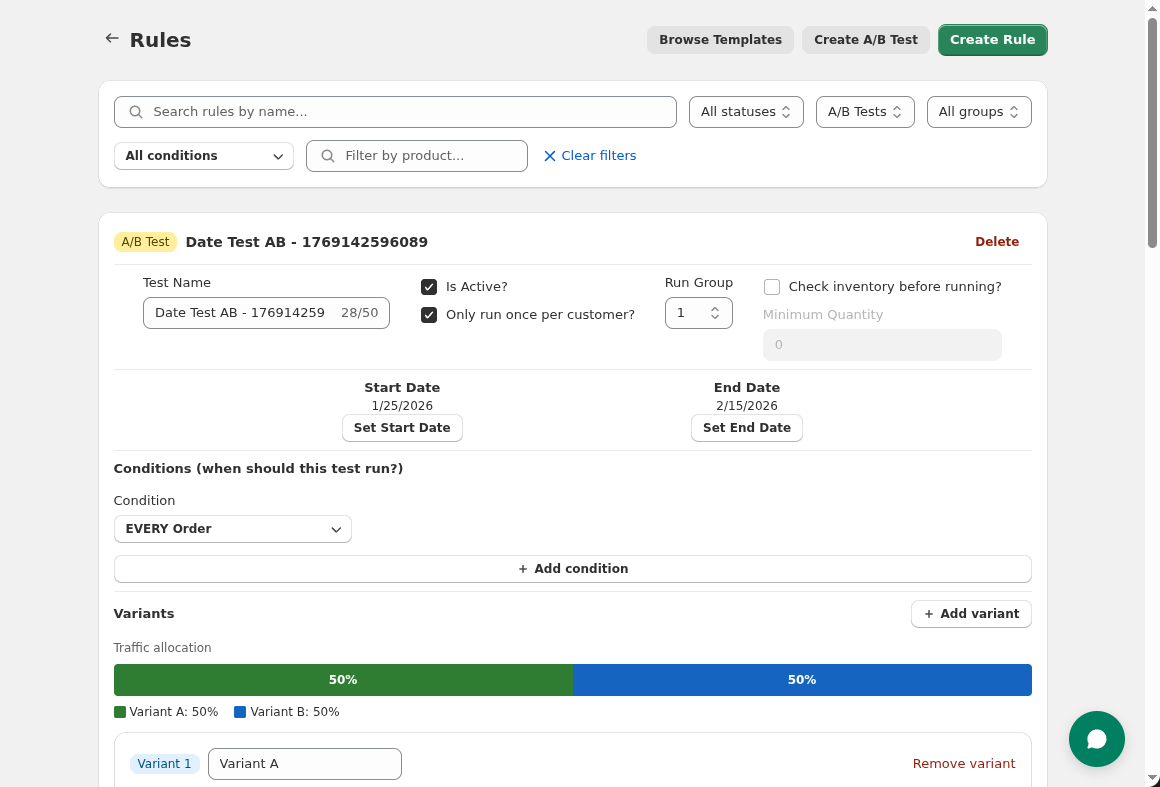

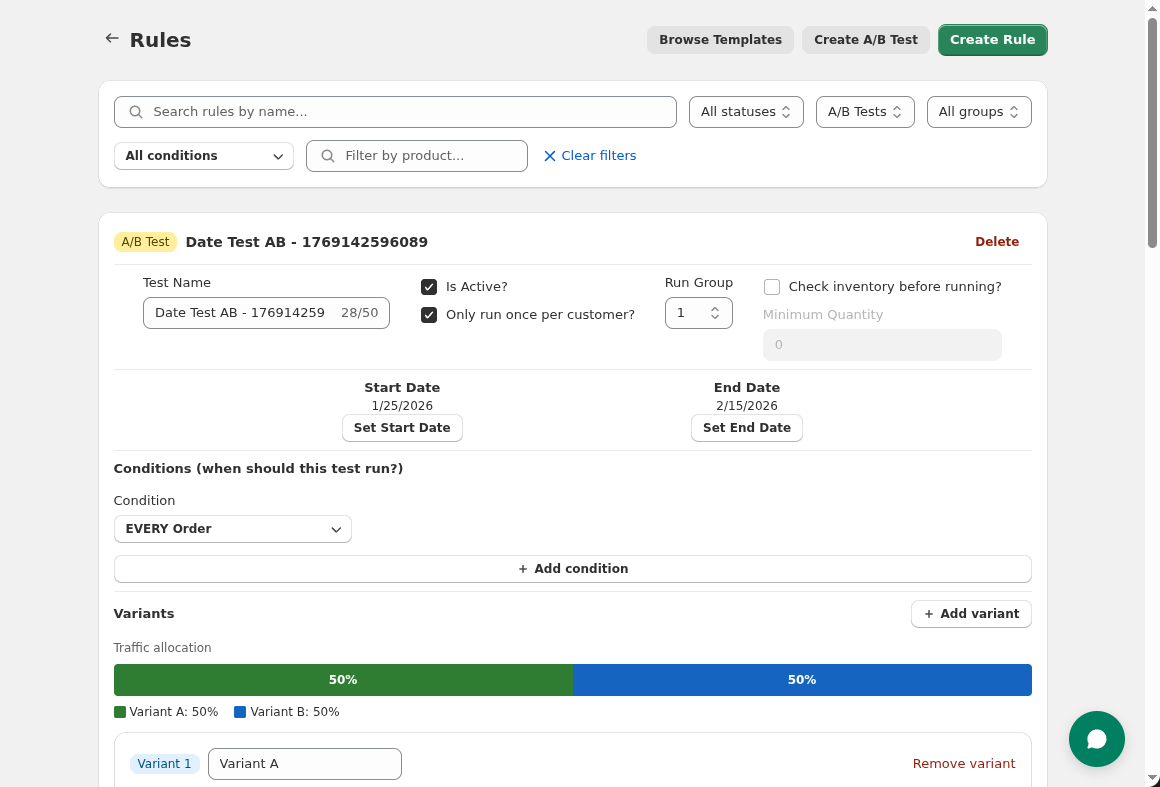

In Insertr, create an A/B test instead of a regular rule:

A/B Test: "First Order Insert Test"

Variants:

| Variant | Percentage | Product |
|---|---|---|
| Discount Card | 50% | $10 Off Next Order Card |
| Thank You Card | 50% | Handwritten-Style Thank You |

Create an A/B test and define your variants with percentage allocations.


Conditions:

Configure each variant with its product and traffic percentage.


Want to test insert vs. no insert? Don't allocate 100% to variants.

Example:

The remaining percentage automatically becomes your control group. These customers receive nothing, giving you a baseline.
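Insertr handles the split for you, and this guide doesn't describe how it decides which order gets which variant. As a purely hypothetical illustration of how percentage allocations and an implicit control group can fit together, here is a minimal Python sketch that hashes each order ID into one of 100 buckets. The `assign_variant` name, the SHA-256 bucketing, and the `test_name` salt are all assumptions, not Insertr's implementation.

```python
import hashlib
from collections import Counter
from typing import Mapping


def assign_variant(order_id: str, allocations: Mapping[str, int], test_name: str) -> str:
    """Hypothetical: map an order to a variant, or to "control" if it falls in the unallocated remainder.

    `allocations` maps variant name -> whole-number percentage; values must sum to 100 or less.
    """
    if sum(allocations.values()) > 100:
        raise ValueError("allocations must sum to 100 or less")
    # Salting with the test name keeps each order in the same bucket for the life of one test.
    digest = hashlib.sha256(f"{test_name}:{order_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # 0..99
    cumulative = 0
    for variant, pct in allocations.items():  # dicts keep insertion order
        cumulative += pct
        if bucket < cumulative:
            return variant
    return "control"  # the leftover percentage receives no insert


if __name__ == "__main__":
    # A 40/40 allocation leaves roughly 20% of orders as a no-insert control group.
    allocations = {"Discount Card": 40, "Thank You Card": 40}
    counts = Counter(
        assign_variant(f"order-{i}", allocations, "first-order-insert-test") for i in range(10_000)
    )
    print(counts)
```

Hashing (rather than random draws) means re-processing the same order never flips its variant, which keeps the "who received what" record consistent.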

Don't check results after 10 orders and declare a winner. You need enough data.

Minimum sample sizes:

At 100 orders/day with 50/50 split, you'd need 4 days minimum.
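The day count is simple division: at 100 orders/day and a 50/50 split, each variant gets about 50 recipients a day. How many recipients you actually need depends on how large a difference you want to detect. A common approximation is the normal-approximation sample-size formula for two proportions; the sketch below assumes 95% confidence and 80% power (the power level is an assumed default, not a figure from this guide), and the function names are illustrative.

```python
import math
from statistics import NormalDist


def recipients_per_variant(p_control: float, p_variant: float,
                           confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate recipients needed in EACH variant to detect p_control vs. p_variant
    with a two-sided test (normal approximation for two proportions)."""
    if p_control == p_variant:
        raise ValueError("rates must differ to compute a sample size")
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95% confidence
    z_beta = NormalDist().inv_cdf(power)                       # about 0.84 at 80% power
    p_bar = (p_control + p_variant) / 2
    term = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant)))
    return math.ceil(term ** 2 / (p_control - p_variant) ** 2)


def days_to_reach(n_per_variant: int, orders_per_day: float, variant_share: float) -> int:
    """Days until a variant receiving `variant_share` of daily orders has n_per_variant recipients."""
    return math.ceil(n_per_variant / (orders_per_day * variant_share))


if __name__ == "__main__":
    print(days_to_reach(200, orders_per_day=100, variant_share=0.5))  # prints 4
    print(recipients_per_variant(0.12, 0.18))
```

Under this approximation, reliably detecting a 12% vs. 18% gap (the size of the gap in the worked example below) takes roughly 555 recipients per variant, so smaller differences need considerably more orders than the bare minimums.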

| Metric | Definition | What It Tells You |
|---|---|---|
| Recipients | Customers who received the variant | Sample size |
| Conversions | Recipients who placed a follow-up order | Did it work? |
| Conversion Rate | Conversions ÷ Recipients | Which variant performs better |
| Revenue | Total revenue from conversion orders | Revenue impact |
| Revenue per Recipient | Revenue ÷ Recipients | Value created per insert |
| AOV | Revenue ÷ Conversions | Who's buying more? |
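Every metric in this table is a ratio of three per-variant totals. Here is a minimal Python sketch, assuming you've already exported recipients, conversions, and conversion revenue for each variant (the `VariantResult` class is illustrative, not an Insertr API).

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    recipients: int   # customers who received the variant
    conversions: int  # recipients who placed a follow-up order
    revenue: float    # total revenue from those follow-up orders

    # Each ratio returns 0.0 instead of dividing by zero before any data arrives.
    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.recipients if self.recipients else 0.0

    @property
    def revenue_per_recipient(self) -> float:
        return self.revenue / self.recipients if self.recipients else 0.0

    @property
    def aov(self) -> float:
        # Average order value of the conversion orders only.
        return self.revenue / self.conversions if self.conversions else 0.0


if __name__ == "__main__":
    for v in (VariantResult("Discount Card", 250, 45, 1575.0),
              VariantResult("Thank You Card", 250, 30, 1560.0)):
        print(f"{v.name}: rate {v.conversion_rate:.0%}, "
              f"rev/recipient ${v.revenue_per_recipient:.2f}, AOV ${v.aov:.2f}")
```

Plugging in the totals from the worked example below reproduces its 18% vs. 12% rates, $6.30 vs. $6.24 revenue per recipient, and $35 vs. $52 AOV.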

After 500 first orders:

| Variant | Recipients | Conversions | Rate | Revenue | Rev/Recipient |
|---|---|---|---|---|---|
| Discount Card | 250 | 45 | 18% | $1,575 | $6.30 |
| Thank You Card | 250 | 30 | 12% | $1,560 | $6.24 |

Winner: Discount Card (18% vs 12% conversion rate)

But wait: look at revenue. Despite 50% more conversions (45 vs. 30), the discount card generated almost the same total revenue ($1,575 vs. $1,560). Why?

Let's add AOV:

| Variant | Conversions | AOV | Revenue |
|---|---|---|---|
| Discount Card | 45 | $35 | $1,575 |
| Thank You Card | 30 | $52 | $1,560 |

The discount attracts more buyers, but they buy less (probably just hitting the discount threshold). The thank you card drives fewer but higher-value purchases.

The right answer depends on your goals:

Just because one variant has a higher conversion rate doesn't mean it's actually better. You need statistical significance.

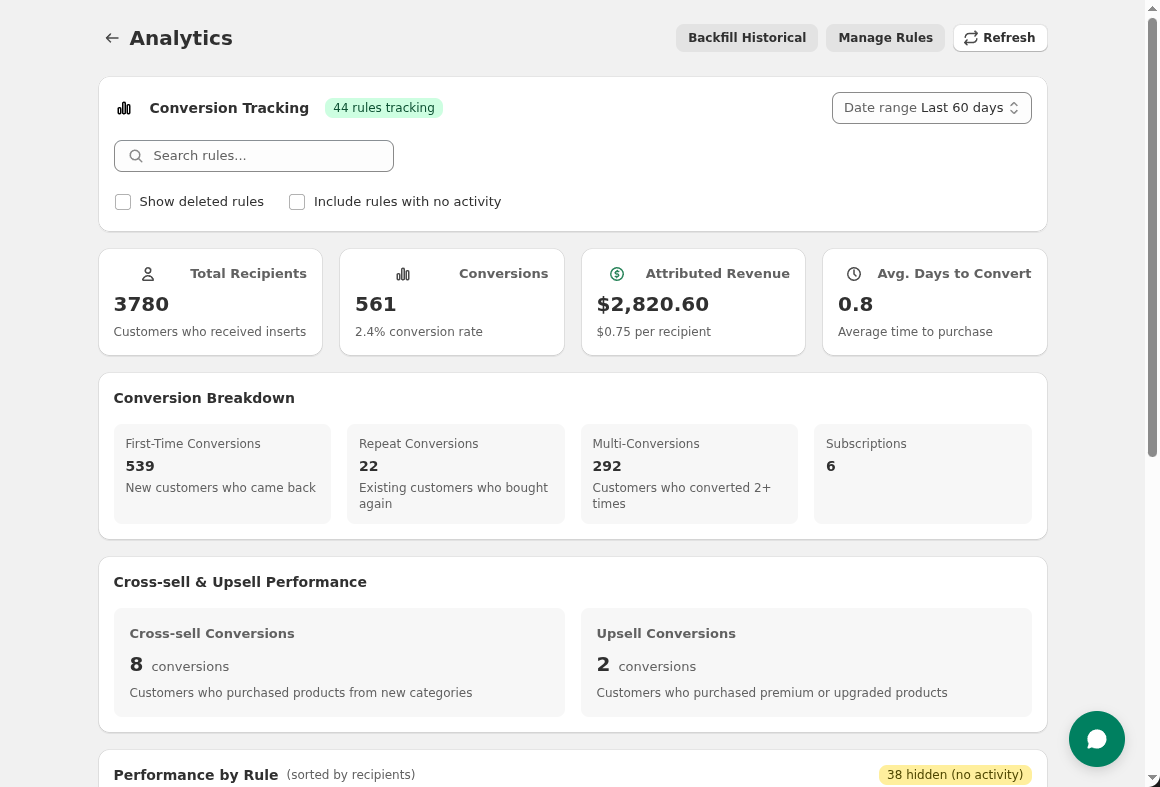

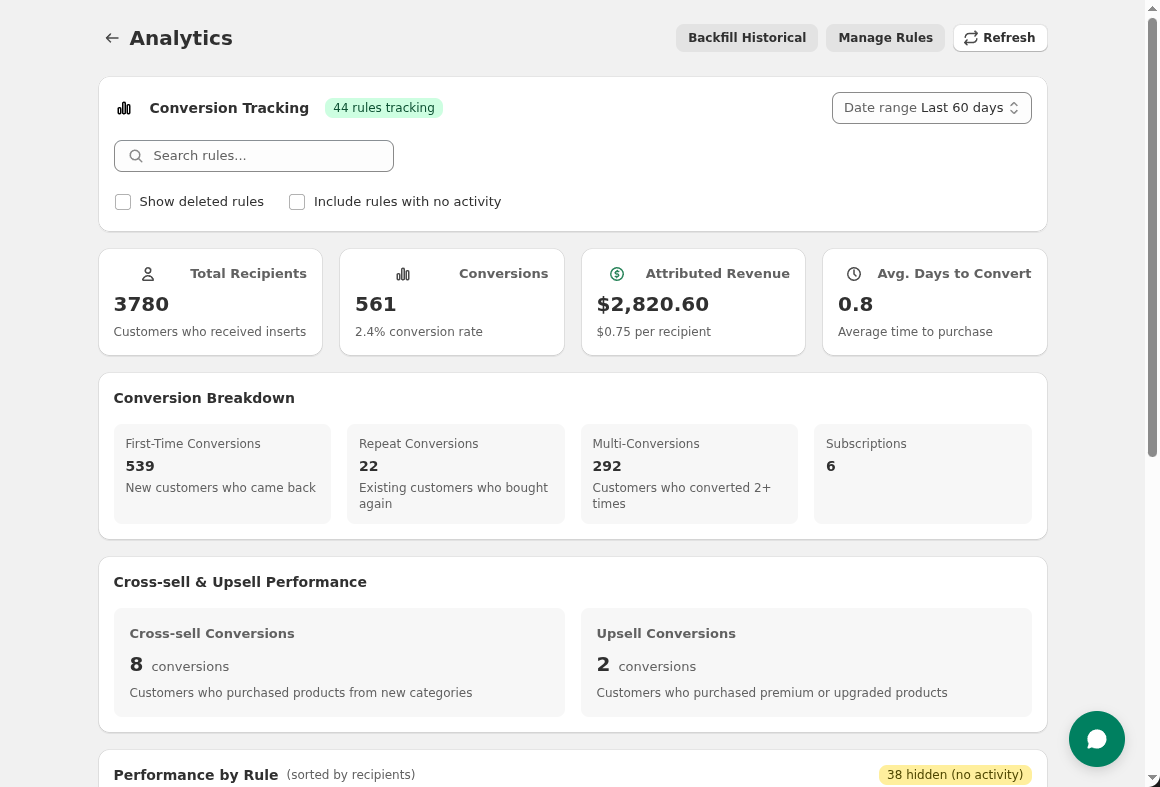

The system calculates significance automatically and tells you when results are reliable.


Insertr calculates significance using a 95% confidence level:

| Results State | What It Means | What to Do |
|---|---|---|
| "Collecting data" | < 30 recipients per variant | Keep waiting |
| "Early results (not significant)" | 30-100 recipients, trend visible | Interesting but don't act yet |
| "Variant A winning (95% confidence)" | Significant result | You can act on this |
| "No significant difference" | 100+ recipients, p ≥ 0.05 | Variants perform similarly |

Don't stop tests early. A 20% vs 15% difference might look meaningful but could be random noise with small samples.
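This guide doesn't say which statistical test Insertr runs internally. A standard choice for comparing two conversion rates is the pooled two-proportion z-test; here is a minimal sketch using only the Python standard library (the function name is illustrative).

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate assuming no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both arms at 0% or both at 100%: no evidence of a difference
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(45, 250, 30, 250)  # Discount Card vs. Thank You Card
    print(f"z = {z:.2f}, p = {p:.3f}, significant at 95%: {p < 0.05}")
```

Run on the worked example above (45/250 vs. 30/250), this gives z ≈ 1.88 and a two-sided p ≈ 0.06, just short of the 95% threshold: exactly the kind of "looks like a winner" result the warning above is about.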

Once you have results, translate them to business impact.

| Component | Discount Card | Thank You Card |
|---|---|---|
| Printing | $0.30 | $0.40 |
| Fulfillment | $0.25 | $0.25 |
| Discount cost (avg redemption) | $7.00 | $0.00 |
| Total per insert | $7.55 | $0.65 |

Don't forget: discount cards have variable cost based on redemption rate.

Discount Card:

Thank You Card:

Even though the discount card had more conversions, the thank you card has dramatically better ROI because it doesn't give away margin.
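Here is the arithmetic behind the cost table and the results report, as a minimal Python sketch (function names are illustrative). It treats ROAS as revenue per insert divided by all-in cost per insert, which reproduces the 0.83x and 9.6x figures. The 250 inserts/month used for savings is an assumption: it is the size of the test's discount arm, and 250 × ($7.55 - $0.65) = $1,725 lines up with the ~$1,700/month quoted in the report.

```python
def cost_per_insert(printing: float, fulfillment: float, discount_cost: float = 0.0) -> float:
    """All-in cost of one insert; discount_cost is the per-insert 'avg redemption' line."""
    return printing + fulfillment + discount_cost


def roas(revenue_per_recipient: float, insert_cost: float) -> float:
    """Revenue generated per dollar spent on the insert."""
    return revenue_per_recipient / insert_cost


def monthly_savings(inserts_per_month: int, current_cost: float, new_cost: float) -> float:
    """Cost saved per month by switching every insert from current_cost to new_cost."""
    return inserts_per_month * (current_cost - new_cost)


if __name__ == "__main__":
    discount = cost_per_insert(0.30, 0.25, discount_cost=7.00)
    thank_you = cost_per_insert(0.40, 0.25)
    print(f"Discount Card:  ${discount:.2f}/insert, ROAS {roas(6.30, discount):.2f}x")
    print(f"Thank You Card: ${thank_you:.2f}/insert, ROAS {roas(6.24, thank_you):.1f}x")
    print(f"Savings at 250 inserts/month: ${monthly_savings(250, discount, thank_you):,.0f}")
```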

Leadership doesn't want a statistics lesson. They want to know:

What did we test? "Discount cards vs thank you cards for first-time buyers."

What did we learn? "Discount cards drive 50% more repeat purchases, but thank you cards have over 10x better ROI."

What should we do? "Switch to thank you cards and reinvest the savings into higher-quality cards."

First Order Insert Test Results

Test Period: Jan 1-31, 2026 (500 first orders)

Variants: Discount Card vs Thank You Card

| Metric | Discount | Thank You | Winner |
|---|---|---|---|
| Conversion Rate | 18% | 12% | Discount |
| Revenue per Insert | $6.30 | $6.24 | Tie |
| ROAS | 0.83x | 9.6x | Thank You |

Recommendation: Switch to Thank You Cards

- Similar revenue impact at roughly 1/10th the cost
- Savings of ~$1,700/month at current volume
- Reinvest in premium card stock for a better impression

The analytics dashboard shows variant comparison side-by-side.


Test multiple things over time:

- Month 1: Insert vs. no insert (prove value)
- Month 2: Discount vs. thank you card (optimize type)
- Month 3: 10% vs. 15% vs. 20% discount (optimize offer)

Each test builds on the previous winner.

Different customers might respond differently:

Create separate A/B tests for different segments.

Test more than two options:

More variants = need more orders for significance.

Q: How long should I run a test?

A: Until you have at least 100 recipients per variant. At a 50/50 split and 100 orders/day, each variant gets about 50 recipients a day, so that takes 2+ days. For high confidence, aim for 2 weeks.

Q: What if results are "not significant"?

A: It means the test found no reliable difference between the variants. That's still useful: you can choose the cheaper option without sacrificing measurable performance.

Q: Can I test more than 2 variants?

A: Yes, but you need more orders to reach significance. With 4 variants at 25% each, you'd need 400+ orders minimum.

Q: What about long-term impact?

A: Set a longer attribution window (90-120 days) to capture delayed conversions. Some customers take time to return.

Q: Should I test everything?

A: No. Test things that matter. "Discount vs. no discount" matters more than "blue card vs. green card."

A DTC food brand tested three first-order inserts:

Variants:

Results after 1,000 first orders:

| Variant | Recipients | Conversions | Rate | AOV | Revenue | Insert Cost | ROAS |
|---|---|---|---|---|---|---|---|
| $5 discount | 400 | 76 | 19% | $38 | $2,888 | $1,200* | 2.4x |
| Recipe card | 400 | 52 | 13% | $42 | $2,184 | $200 | 10.9x |
| No insert | 200 | 20 | 10% | $41 | $820 | $0 | n/a |

*Includes $5 discount cost on redemptions
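The derived columns in this table follow from the raw counts: Rate = Conversions ÷ Recipients, Revenue = Conversions × AOV, ROAS = Revenue ÷ Insert Cost. The sketch below recomputes them and also reports each arm's lift over the no-insert control in percentage points. The lift figure and the `Arm`/`summarize` names are illustrative additions, not part of the original analysis.

```python
from typing import Dict, Mapping, NamedTuple


class Arm(NamedTuple):
    recipients: int
    conversions: int
    aov: float          # average order value of the follow-up orders
    insert_cost: float  # total insert cost for this arm (0 for the control)


CASE_STUDY = {
    "$5 discount": Arm(400, 76, 38.0, 1200.0),
    "Recipe card": Arm(400, 52, 42.0, 200.0),
    "No insert": Arm(200, 20, 41.0, 0.0),
}


def summarize(arms: Mapping[str, Arm], control: str) -> Dict[str, dict]:
    """Rate, revenue, ROAS, and lift over the control arm (in percentage points) per arm."""
    control_rate = arms[control].conversions / arms[control].recipients
    rows = {}
    for name, arm in arms.items():
        rate = arm.conversions / arm.recipients
        revenue = arm.conversions * arm.aov
        roas = revenue / arm.insert_cost if arm.insert_cost else None  # undefined with zero cost
        rows[name] = {"rate": rate, "revenue": revenue, "roas": roas,
                      "lift_pts": (rate - control_rate) * 100}
    return rows


if __name__ == "__main__":
    for name, row in summarize(CASE_STUDY, control="No insert").items():
        roas = f"{row['roas']:.1f}x" if row["roas"] is not None else "n/a"
        print(f"{name:12} rate={row['rate']:.0%} revenue=${row['revenue']:,.0f} "
              f"ROAS={roas} lift={row['lift_pts']:+.1f} pts")
```

The control arm is what makes the lift column possible: it shows how many conversions would likely have happened with no insert at all.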

Key findings:

Decision: Switch to recipe cards, reinvest savings into more frequent product updates.

Ready to prove (or disprove) your insert ROI?

Stop guessing what works. Start measuring.

Last updated: January 2026 | Author: Tom McGee, Founder of Insertr

About the Author: Tom McGee is the founder of Insertr and a former Senior Software Engineer at both Shopify and ShipBob. He built Insertr's A/B testing and analytics features specifically to solve the measurement problem he experienced running Cool Steeper Club, where he needed to prove which inserts actually drove subscriber retention.